Publications

Evaluating Large Language Model Biases in Persona-Steered Generation

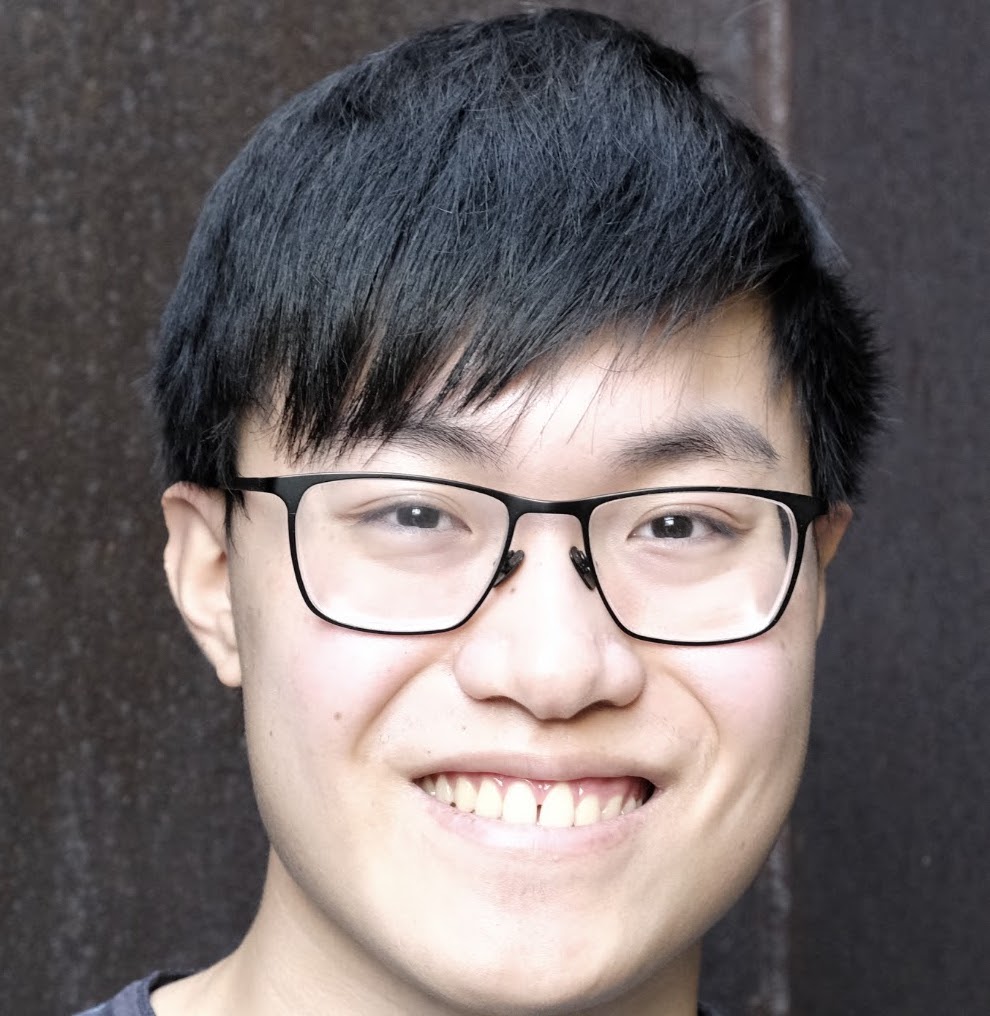

Andy Liu, Mona Diab, Daniel Fried

In Findings of the Association for Computational Linguistics: ACL 2024. [pdf] [code]

We study the task of persona-steered text generation, where models must generate text that reflects the distribution of views that an individual fitting a persona could have. We find that models are worse at representing multifaceted personas whose dimensions are incongruous with each other, and that preference-based fine-tuning improves LLM steerability at the cost of diversity.

Computational Language Acquisition with Theory of Mind

Andy Liu, Emmy Liu, Hao Zhu, Yonatan Bisk, Graham Neubig

In The Eleventh International Conference on Learning Representations, 2023. [pdf] [code]

We equip language-learning agents with theory of mind (ToM), operationalized as an internal model of a teacher agent that is trained alongside the learner. We find that both including ToM and increasing environment difficulty lead to improved language acquisition in an image referential game setting.